In the digital marketplace, brand protection is no longer optional; it is essential. This guide on proxy-based brand monitoring explains how companies use residential and rotating proxies to uncover counterfeit listings, unauthorized resellers, and brand misuse across online platforms. Learn how proxy networks support global monitoring, compliance, and enforcement with region-accurate, anonymous access.

What Is Proxy-Based Brand Monitoring?

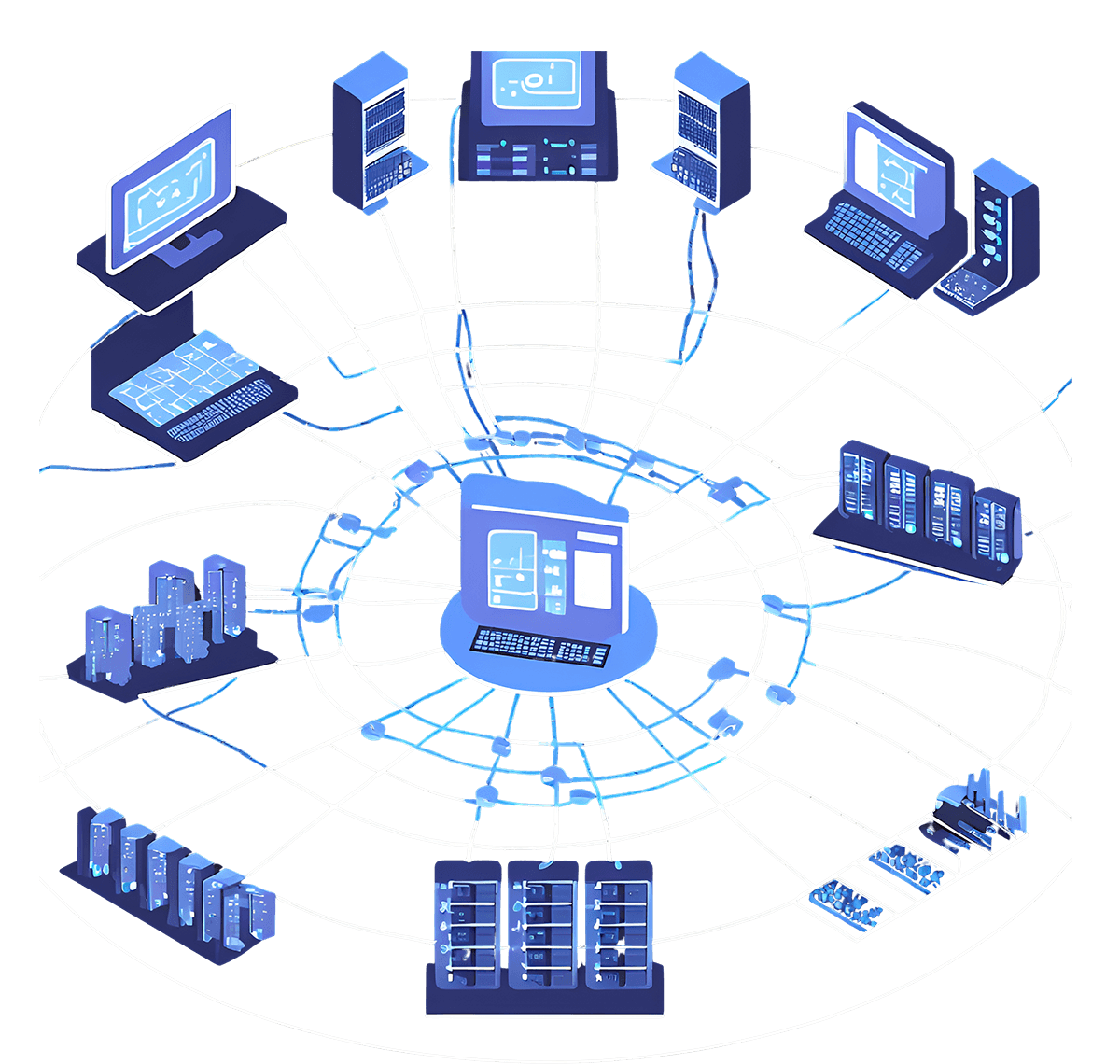

Proxy-based brand monitoring is the process of tracking and verifying your brand’s presence across the internet using proxy servers. These proxies mask your IP and location, allowing your monitoring tools or analysts to view e-commerce sites, marketplaces, and social platforms as real users from different regions. By using residential proxies—IPs assigned by ISPs to real devices—businesses can detect hidden or region-specific counterfeit listings that standard monitoring tools might miss.

Why Proxies Are Essential for Brand Protection

- Unbiased Market Visibility: Detect counterfeit or unauthorized listings that appear only to certain regions or IP addresses.

- Multi-Regional Access: Inspect global e-commerce platforms like Amazon, eBay, Alibaba, and Shopee using local IPs from different countries.

- Bypass Anti-Bot Systems: Residential proxies resemble ordinary user traffic, which lowers the chance of site blocks or CAPTCHAs, though it does not eliminate them.

- Discreet Investigations: Monitor competitors or potential infringers anonymously to gather evidence for enforcement.

- Data-Driven Enforcement: Collect structured evidence from product listings, reviews, and seller profiles for legal action or takedowns.

How Proxy-Based Brand Monitoring Works

When monitoring teams or automated systems access websites through proxies, each request is routed through an alternate IP address, so the site sees a visitor from the proxy's location rather than from your own network. This helps detect regional counterfeit listings or resellers targeting specific markets. Rotating proxies add scalability by spreading hundreds of requests across many IPs, which lowers the risk of blocks and supports consistent, automated brand intelligence gathering.
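To make the routing concrete, here is a minimal sketch using only Python's standard library. The gateway `proxy.example.com:8000` and the `-country-xx` username suffix are assumptions for illustration; geo-targeting syntax differs between providers, so check your provider's documentation for the real format.

```python
import urllib.request
from urllib.parse import quote

# Hypothetical gateway; replace with the host and port your provider gives you.
PROXY_HOST = "proxy.example.com"
PROXY_PORT = 8000


def proxy_url(username: str, password: str, country: str) -> str:
    """Build a proxy URL. The '-country-xx' suffix is an assumed convention."""
    user = quote(f"{username}-country-{country.lower()}", safe="")
    secret = quote(password, safe="")
    return f"http://{user}:{secret}@{PROXY_HOST}:{PROXY_PORT}"


def opener_for(country: str, username: str, password: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS traffic through the proxy."""
    url = proxy_url(username, password, country)
    handler = urllib.request.ProxyHandler({"http": url, "https": url})
    return urllib.request.build_opener(handler)


def fetch(page_url: str, country: str, username: str, password: str,
          timeout: float = 20.0) -> str:
    """Fetch a page as it would be served to a visitor in the given country."""
    opener = opener_for(country, username, password)
    with opener.open(page_url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `fetch(listing_url, "de", username, password)` and then the same URL with `"us"` lets you compare what each region is shown.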

Key Applications of Brand Monitoring with Proxies

| Use Case | Description | Who Benefits |
|---|---|---|
| Counterfeit Detection | Identify fake or unauthorized products listed across marketplaces using geo-specific proxies. | Brand Protection & Legal Teams |
| Unauthorized Reseller Tracking | Spot gray-market sellers offering genuine products outside approved channels. | Sales & Compliance Departments |
| Ad & Trademark Monitoring | Verify brand usage in online ads, banners, and sponsored content globally. | Marketing Teams, Agencies |
| Marketplace Intelligence | Collect competitive insights from product listings, reviews, and pricing strategies. | Product & Strategy Teams |
| Legal Evidence Collection | Document violations with IP-based proof to support cease-and-desist actions. | Corporate Legal Counsel |
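For the evidence-collection use case, one possible approach is to store a small record with each captured page: the listing URL, the region it was viewed from, a UTC timestamp, and a hash of the page body. The `EvidenceRecord` fields below are illustrative assumptions, not a legal standard; your counsel may require other details.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class EvidenceRecord:
    listing_url: str
    country: str         # region the page was viewed from
    captured_at: str     # UTC timestamp in ISO 8601 format
    content_sha256: str  # fingerprint of the captured page body


def make_record(listing_url: str, country: str, page_body: bytes) -> EvidenceRecord:
    """Create a record for a page that has already been downloaded."""
    return EvidenceRecord(
        listing_url=listing_url,
        country=country.upper(),
        captured_at=datetime.now(timezone.utc).isoformat(timespec="seconds"),
        content_sha256=hashlib.sha256(page_body).hexdigest(),
    )


def to_json_line(record: EvidenceRecord) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

The hash lets you show later that an archived copy of the page matches what was captured at that time.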

Benefits of Using Residential Proxies for Brand Monitoring

- Real-User Simulation: Residential IPs resemble genuine buyers, giving more reliable access to geo-restricted listings.

- Scalable Monitoring: Track thousands of listings simultaneously across multiple sites and regions.

- Accurate Data Collection: Retrieve localized content versions and hidden marketplace listings.

- Enhanced Privacy: Protect internal IPs and prevent retaliation from bad actors or sellers.

- Ethical & Legal Compliance: Monitor public data responsibly, respecting site terms and privacy laws.

Step-by-Step: Setting Up Proxy-Based Brand Monitoring

- Define Your Objectives: Identify what to monitor—counterfeits, unauthorized resellers, ad misuse, or brand mentions.

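- Select a Proxy Type: Use residential proxies when requests need to look like ordinary local users, and enable rotation for large-scale automation. The two are not mutually exclusive, since a residential pool can also rotate.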

- Integrate Monitoring Tools: Configure your brand protection or scraping platform with proxy credentials (host:port + authentication).

- Set Regional Targets: Choose specific countries or cities where brand abuse is most common.

- Review Data & Act: Aggregate results, flag suspicious listings, and trigger enforcement or takedown processes.
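The steps above can be sketched as a small plan: pair each search page with each target region, then flag the listings that come back. Every value here (URLs, seller names, prices, and the 60% threshold) is a placeholder assumption, and the `Listing` fields assume your own scraper has already parsed the page.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Listing:
    url: str
    seller: str
    price: float
    country: str


# Illustrative placeholders; replace with your own objectives and targets.
MARKETPLACE_SEARCH_URLS = ["https://marketplace.example/search?q=acme+widget"]
TARGET_COUNTRIES = ["us", "de", "sg"]
AUTHORIZED_SELLERS = {"acme-official", "trusted-retail"}
LIST_PRICE = 100.0
MIN_PRICE_RATIO = 0.6  # prices below 60% of list are treated as suspicious


def build_jobs(urls, countries):
    """Return one (url, country) job for every combination of page and region."""
    return list(product(urls, countries))


def flag_reasons(listing: Listing) -> list:
    """Return the reasons a listing looks suspicious; an empty list means none."""
    reasons = []
    if listing.seller.lower() not in AUTHORIZED_SELLERS:
        reasons.append("unauthorized seller")
    if listing.price < LIST_PRICE * MIN_PRICE_RATIO:
        reasons.append("price far below list")
    return reasons
```

Rules like these only surface candidates. A person should still review each flagged listing before any takedown request is sent.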

FAQs – Proxy-Based Brand Monitoring

Why use proxies for brand protection?

Proxies allow businesses to monitor marketplaces from multiple regions and devices anonymously, uncovering region-specific counterfeit listings and unauthorized sellers that may not appear otherwise.

Are residential proxies better than datacenter proxies?

In most brand-monitoring scenarios, yes. Residential proxies use IPs assigned by ISPs, which improves access to restricted listings and reduces detection risk compared to datacenter IPs.

Can proxies help automate brand monitoring?

Yes. Rotating proxies let automated tools scan thousands of listings, ads, or web pages in parallel while spreading requests across many IPs, which makes IP bans less likely.
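As a rough illustration of client-side rotation, the sketch below assigns proxies to URLs in round-robin order and runs the requests in a thread pool. The `fetch` callable is a hypothetical function you supply. Many providers rotate IPs at the gateway instead, in which case a single endpoint is enough and this pairing step is unnecessary.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import cycle


def assign_proxies(urls, proxy_urls):
    """Pair each URL with a proxy, cycling through the pool in order."""
    if not proxy_urls:
        raise ValueError("proxy_urls must not be empty")
    return list(zip(urls, cycle(proxy_urls)))


def run_batch(urls, proxy_urls, fetch, max_workers=8):
    """Call fetch(url, proxy) for every pair; results keep the input order."""
    pairs = assign_proxies(urls, proxy_urls)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda pair: fetch(*pair), pairs))
```

Keeping `max_workers` modest and adding delays inside `fetch` helps avoid overloading the sites you monitor.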

Is proxy-based monitoring legal?

Generally, yes, when it is limited to publicly available data and complies with applicable regulations such as GDPR and CCPA.

Which regions can be monitored using proxies?

With global proxy coverage, you can monitor marketplaces and domains across North America, Europe, Asia, and emerging markets.

Conclusion

Proxy-based brand monitoring helps companies stay ahead of counterfeiters and unauthorized resellers by offering global visibility and secure, anonymous data collection. By integrating residential and rotating proxies, businesses can safeguard brand integrity, protect intellectual property, and act quickly against online threats wherever they appear.

Next Steps

Start Monitoring with DynaProx — simulate real-user access from popular U.S. states using unlimited U.S. residential proxies. Validate the accuracy of your content, SEO, and UX for your global audience.

Explore more guides on SEO proxies, scraping proxies, and geo-testing automation on the DynaProx Blog.
